Pre-Pandemic Timeline

1980 to 1999

Chronological order of significant global data points in the years leading up to the COVID-19 Pandemic

The Human Genome Project is launched

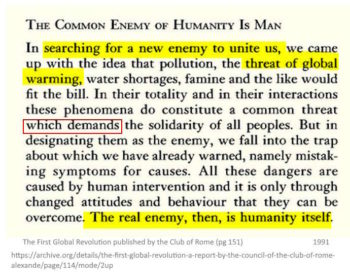

The Club of Rome decide the “new enemy” will be “humanity itself”

In 1991, in the post-Cold War era, the Club of Rome (a globalist think tank) published The First Global Revolution, in which they wrote “we came up with the idea that pollution, the threat of global warming…and the like” would become the “new enemy to unite us” and that “these phenomena do constitute a common threat which demands the solidarity of all peoples”. They went on to state that “all these dangers are caused by human intervention” and that “humanity itself” is “the real enemy”. [1]

The following year, in June 1992, Brundtland’s “Our Common Future” report formed the basis for Agenda 21 at the UNCED Earth Summit, where the UNFCCC, which “established an international environmental treaty to combat ‘dangerous human interference with the climate system’”, was signed.

UN OCHA is established

On December 19, 1991, the United Nations General Assembly adopted resolution 46/182, which called for stronger international leadership in responding to complex emergencies and natural disasters. As a result, the UN Office for the Coordination of Humanitarian Affairs (OCHA) was established. [1, 2]

OCHA’s ReliefWeb news site reports on relief activities, including stories such as the profits made by mRNA COVID-19 vaccine manufacturers while poor countries remain unvaccinated!

WEF started the Young Global Leaders program

The World Economic Forum (WEF) (a private enterprise founded in 1971 by Klaus Schwab) started the Global Leaders for Tomorrow school in 1992, which was re-established in 2004 as the Young Global Leaders (YGL) program. Attendees must apply for admission and are then subjected to a rigorous selection process. [7, 8, 9]

The program’s commitment is to “improving the state of the world”, which means grooming their acolytes and helping them “penetrate” positions of influence and government around the world. [4, 5, 6]

The first class included Angela Merkel and Bill Gates, and later classes included Aznar (former Spanish PM), Macron, Jens Spahn, Justin Trudeau and Jacinda Ardern. [1, 2]

Prelude to Agenda 21: The Convention on Biological Diversity adopted at Nairobi Conference

In response to the threat of species extinction caused by human activity and inspired by the world community’s growing commitment to sustainable development, the United Nations Environment Program (UNEP), whose founding executive director was Maurice Strong, convened the Ad Hoc Working Group of Experts on Biological Diversity in November 1988 to explore the need for an international convention on biological diversity. UNEP then established the Ad Hoc Working Group of Technical and Legal Experts to prepare an international legal instrument for the conservation and sustainable use of biological diversity. [1, 2, 5]

By February 1991, the Ad Hoc Working Group had become known as the Intergovernmental Negotiating Committee. At the Nairobi Conference on May 22, 1992, the “agreed text” of the Convention on Biological Diversity (CBD) was adopted.

The CBD was opened for signatures on June 5, 1992 at the United Nations Conference on Environment and Development (UNCED) (the Rio “Earth Summit”) and by June 4, 1993 it had received 168 Member State signatures. The Convention entered into force on December 29, 1993. [4]

Following the convention, a need was identified for “a practical tool for translating the principles of Agenda 21 into reality”, so UNEP created the Global Biodiversity Assessment, a book published in 1995 by Cambridge University Press. This book “takes Agenda 21 and breaks down what each Nation is going to look like to save the planet…it tells you what is not sustainable, and therefore needs to be limited or eliminated.” [3]

UNCED Earth Summit – Agenda 21

In 1987, Trilateral Commission member Gro Harlem Brundtland presented the report of the World Commission on Environment and Development, Our Common Future, to the UN General Assembly. This report defined and popularized the term Sustainable Development for world consumption. [1]

From June 3 to 14, 1992, the United Nations Conference on Environment and Development (UNCED) ‘Earth Summit’ was held in Rio de Janeiro, Brazil. It was organised by Maurice Strong, a co-author of Our Common Future, who served as the summit’s Secretary-General and had earlier been UNEP’s first executive director. [2, 3, 4]

The United Nations Framework Convention on Climate Change (UNFCCC) was signed, which “established an international environmental treaty to combat ‘dangerous human interference with the climate system’, in part by stabilizing greenhouse gas concentrations in the atmosphere”. [8]

Also at this conference “The Agenda for the 21st Century” (Agenda 21) was born. Brundtland’s report received praise and accolades from the UN for providing the framework for a sustainable future. [5, 6, 7]

Officially “Agenda 21 is the framework for activity into the 21st century addressing the combined issues of environment protections and fair and equitable development for all.”

According to extensive research by Patrick Wood, “Agenda 21 is a … comprehensive blueprint specifically designed to change our way of life and our form of government.” And as Rosa Koire [9] states, Agenda 21 sustainable development is the:

“…inventory and control plan of all land, water, all minerals, all plants, all animals, all construction, all means of production, all food, all energy, all information and all human beings.”

In 2015, Agenda 21 was significantly expanded to become Agenda 2030, a 15-year plan to achieve 17 Sustainable Development Goals (SDGs).

The new “sustainable” or “green” economic paradigm is highly correlated with the original 1934 specification for Technocracy, namely, that it is a resource-based economic system that uses energy as the “currency”. Think “Carbon Credits” and “Smart Cities”, and know that the UN documents stress the doctrine of “no one left behind”.

A UN publication “Agenda 21: The Earth Summit Strategy to Save our Planet” states “Agenda 21 proposes an array of actions which are intended to be implemented by every person on Earth…it calls for specific changes in the activities of all people… Effective execution of Agenda 21 will require a profound reorientation of all humans, unlike anything the world has ever experienced.”

The Earth Summit allowed the World Conservation Bank to become a reality. Now known as the Global Environment Facility (GEF), it is the largest public funder of global environmental projects. The GEF is the financial mechanism for the United Nations Framework Convention on Climate Change (UNFCCC), the organizing convention directing the Intergovernmental Panel on Climate Change (IPCC).

George Hunt attended meetings leading up to this Earth Summit, and on May 1, 1992 he released a video warning of what he had witnessed.

The Convention on Biological Diversity at UN Earth Summit

The Convention on Biological Diversity (CBD) was opened for signatures on 5 June 1992 at the United Nations Conference on Environment and Development (UNCED) (the Rio “Earth Summit”) and entered into force on 29 December 1993 as a legally binding global treaty, which Australia signed.

The CBD is said to be “inspired by the world community’s growing commitment to sustainable development”. It was the result of UNEP ad hoc intergovernmental workshops starting in 1988, including a sub-workshop on biotechnology, which was described as “a valuable contribution to resource conservation and sustainable development.”

At its core, the Biodiversity Convention’s “true mission was capturing and using biodiversity for the sake of the biotechnology industry,” and “protecting the pharmaceutical and emerging biotechnology industries”. [1, 2]

“[T]he convention implicitly equates the diversity of life – animals and plants – to the diversity of genetic codes, for which read genetic resources. By doing so, diversity becomes something modern science can manipulate.” [3, 4]

This convention treaty sets up the justification for classifying, mapping and cataloguing nature down to its genetic code using emerging technologies from that time.

This started with the Global Taxonomy Initiative (GTI), justified on the grounds that “taxonomy is essential to implementation of the Convention on Biological Diversity”.

- 1986 – US DOE Office of Science launched the Human Genome Project, justified to help “Hiroshima” victims, but really because biology now had new tools!

- 1990 – Human Genome Project officially begins

- 1990 – PhyloCode theoretical foundation

- 2000 – PhyloCode first draft includes “Provisions for Hybrids”

- 2003 – DNA barcoding was conceived

- 2004 – International Phylogenetic Nomenclature meetings began

- 2008 – The International Barcode of Life Consortium (iBOL) began

Two scientists who attended the conference wrote, on page 42 of the book The Earth Brokers:

“The Convention implicitly equates the diversity of life – animals and plants to the diversity of genetic codes. By doing so, diversity becomes something modern science can manipulate. It promotes biotechnology as being ‘essential for the conservation and sustainable use of biodiversity’.”

Global Harmonization Task Force is conceived

In September 1992 “senior regulatory officials and industry representatives from the European Union, the United States, Canada and Japan met in Nice, France to explore the feasibility of forming such a global consultative partnership aimed at harmonizing medical device regulatory practices” and so was conceived the Global Harmonization Task Force (GHTF). [1, 2]

GHTF is “a voluntary international consortium of public health officials responsible for administering national medical device regulatory systems and representatives from the regulated industry.” [3]

“During the second meeting of the GHTF, held in Tokyo, Japan, in November 1993, representatives from Australia joined the organization and Study Group 4 was founded to develop guidance on harmonized regulatory auditing practices.” [5]

In October 2011, the “task force” became the International Medical Device Regulators Forum (IMDRF), with its Chair and Secretariat drawn from Australia’s TGA. [4]

Legislation allows FDA to be funded by pharmaceutical giants

On September 22, 1992, the US Congress passed H.R.5952, the Prescription Drug User Fee Act (PDUFA), which was signed into law on October 29, 1992. This Act allowed pharmaceutical sponsors to “fund the US Food and Drug Administration (FDA) directly through ‘user fees’ intended to support the cost of swiftly reviewing drug applications. With the Act, the FDA moved from a fully taxpayer funded entity to one supplemented by industry money. The PDUFA fees collected have increased 30 fold, from around $29m in 1993 to $884m in 2016.” The PDUFA fee schedule is renewed every five years. [1, 2, 3]

New drugs have patents, which have a time limit before they expire, and research and development (R&D) costs cannot be recouped until a product gains regulatory approval. The FDA estimated that a 30-day delay could cost the drug sponsor $10 million, and the regulator already had a backlog of drugs awaiting approval. A faster regulatory process was argued to be in the interest of the manufacturers (lower cost to market), the regulator (funding for more staff) and consumers (life-saving drugs!). [1]

Spurred on by the AIDS and cancer epidemics, a presidential advisory panel on drug approval reported on August 16, 1990 that it “estimated that thousands of lives were lost each year due to delays in approval” and suggested the FDA “should speed up approval of experimental AIDS and cancer drugs by requiring less evidence of the drugs’ effectiveness before they are put on the market”. [4, 5]

The regulatory staff are effectively hired by the pharmaceutical companies to approve and market their products.

Between 1993 and 2003, the median approval time for priority New Drug Applications (NDA) and Biologics License Applications (BLA) fell by more than half, from 13.2 months to 6.4 months, and for standard approvals by more than a third, from 22.1 months to 13.8 months. They increased efficiencies “while holding to the same high standard of evidence for drug safety and effectiveness”. PDUFA funding paid for additional review staff, allowing more FDA-sponsor interactions for scientific and regulatory consultation at a number of critical milestones throughout drug development prior to submission (fig 3.1). [6]
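The relative size of these reductions can be checked directly from the quoted medians. This is only a sketch of the arithmetic, using the approval times quoted in the paragraph above; the helper name `percent_reduction` is illustrative and does not come from any FDA source.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage fall from `before` to `after`, rounded to one decimal place."""
    return round((before - after) / before * 100, 1)


# Median approval times in months (1993 -> 2003), as quoted above.
approval_times = {
    "priority NDA/BLA": (13.2, 6.4),
    "standard NDA/BLA": (22.1, 13.8),
}

for label, (before, after) in approval_times.items():
    print(f"{label}: {before} -> {after} months, {percent_reduction(before, after)}% shorter")
```

It prints a reduction of 51.5% for priority applications and 37.6% for standard applications.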

On December 7, 1995, the FDA approved Roche Laboratories’ saquinavir, the first of a new category of drugs called protease inhibitors, designed to prevent HIV from replicating when used in combination with older nucleoside analogue medications such as AZT. It took the FDA only 97 days after the agency received the marketing application to give it the green light. [5]

In June 2020 the British Medical Journal (BMJ) asked six leading global regulators in Australia, Canada, Europe, Japan, the UK, and US, “questions about their funding, transparency in their decision making (and of data), and the rate at which new drugs are approved” and they found that “industry funding of drug regulators has become the international norm.”

The concept of Evidence-Based Medicine is born

A paper by Guyatt et al., “Evidence-Based Medicine: A New Approach to Teaching the Practice of Medicine”, published on November 4, 1992, stated that the intent of Evidence-Based Medicine (EBM) was “to shift the emphasis in clinical decision making from ‘intuition, unsystematic clinical experience, and pathophysiologic rationale’ to scientific, clinically relevant research.” And so began the EBM movement.

Through the years, EBM displaced “the doctor-patient unit as the ultimate decision-making authority”. EBM was quickly “hijacked” by industry to “serve agendas different from what it originally aimed for”, promoting industry products through “clinical practice guidelines”. These guidelines are determined by a consensus of “experts” who are often captured by industry funding; “ironically, many guideline recommendations are based on low quality, or no evidence,” and fall short of trustworthy standards.

EBM “relies heavily on studies of large populations and therefore statistics, which are inherently unreliable and easy to manipulate”, yet these population-level studies are used to “dictate” a one-size-fits-all treatment, ignoring clinical experience and individual patient needs. [2]

A cornerstone of EBM is the hierarchical system of classifying evidence known as the levels of evidence, where randomized controlled trials (RCT) are considered the highest level and case series or expert opinions are the lowest level. [1]

He who controls the “evidence” controls “the science”, and in turn “influences” third-party “health officials” to formulate clinical practice guidelines (protocols) that hospital administrators then force their doctors to follow! [3, 4, 5]

Biotechnology Industry Organization (BIO) is created

In 1993, the Biotechnology Industry Organization (BIO) was created through the merger of the Industrial Biotechnology Association and the Association of Biotechnology Companies, both small Washington-based biotechnology trade (and lobby) organizations, which hired Washington veteran Carl B. Feldbaum as BIO’s first president. In 1993 there were only a handful of biotechnology drugs and vaccines on the market, and the Human Genome Project had only recently begun.

BIO hosts the largest biotechnology annual event in the world, which “dates back to 1987, when the Association of Biotechnology Companies hosted an international conference in Washington, D.C., that exceeded expectations by attracting 155 attendees”, which grew to 1,400 in 1993 and 14,000 by 2001. [1]

- BIO lobbies the FDA, and helps support the FDA’s efforts!

- BIO works to “combat” legislation and policy that doesn’t work in favour of biotechnology and science, with Big Pharma as its funding partners.

- BIO is targeting adult vaccination, to get everyone!

- BIO is making short films to influence opinion?

Fast forward to 2021 and the COVID-19 pandemic, when a new bio-pharma mRNA injectable platform product was accelerated through the regulatory agencies under emergency use, where otherwise no regulatory pathway had been established for a gene therapy vaccine. This lobby group, BIO, whose members include Pfizer, Moderna, Gilead and more, funded the “public health nonprofit” and “innovative media” organisation called the Public Good Projects (PGP), which in turn paid for pro-vaccine action and the takedown of counter-narrative doctors and nurses. [2, 3]

World Trade Center – Twin Tower Bombing

On 26 February 1993, just over a month after President Clinton’s inauguration, a bomb went off just after noon in the “parking garage beneath the World Trade Center,” killing six people and injuring more than 1,000 others. [1, 2, 3]

“This event was the first indication for the Diplomatic Security Service (DSS) that terrorism was evolving from a regional phenomenon outside of the United States to a transnational phenomenon”. The FBI stated that “Middle Eastern terrorism had arrived on American soil, with a bang”.

This “terrorist attack” came just three years after the fall of the Berlin Wall, an event that marked the end of the US-Soviet Cold War and, with it, the end of the justification for a huge military budget, since there was no longer an enemy.

But on July 1, 1994, President Clinton introduced the National Security Strategy as a way to “sustain our active engagement abroad”. [4, 5]

With few, if any, military threats to the US, Clinton defined security as including “protecting … our way of life” in order to seize the new opportunities for prosperity presented in the post-Cold War environment. The general approach of this strategy is “globalist” with “selective engagement” in areas and events in which the US has “particular interest”.

The World Wide Web is launched into the public domain

On April 30, 1993, CERN, in Switzerland, declared that the underlying code for the World Wide Web (WWW or W3) would be made available on a royalty-free basis, forever, thus launching the WWW into the public domain. [1]

Sir Tim Berners-Lee, a British computer scientist based at CERN, is credited with inventing the World Wide Web in March 1989 following his Information Management “Proposal”. His aim was to “work toward a universal linked information system”, creating a universal computing language so that information could be shared across the interconnected network of computers (the internet) all around the world. Berners-Lee realised they could share information by exploiting an emerging technology called hypertext. [3, 6]

By October of 1990, Tim had written the three fundamental technologies that remain the foundation of today’s web:

- HTML: HyperText Markup Language

- URI: Uniform Resource Identifier, commonly called a URL

- HTTP: Hypertext Transfer Protocol
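To make the three pieces concrete: the URL names where a resource lives, HTTP is the request a browser sends to fetch it, and the reply carries an HTML page. The sketch below splits a URL with Python’s standard `urllib.parse.urlsplit` and builds the text of a minimal GET request without sending it. It assumes the modern HTTP/1.1 request format rather than the much simpler protocol of 1990, and the helper names `describe_url` and `http_get_request` are illustrative. The example address is that of CERN’s first web page.

```python
from urllib.parse import urlsplit


def describe_url(url: str) -> dict:
    """Split a URL into the parts a browser uses to locate a resource."""
    parts = urlsplit(url)
    return {"scheme": parts.scheme, "host": parts.hostname, "path": parts.path or "/"}


def http_get_request(url: str) -> str:
    """Build the text of a minimal HTTP/1.1 GET request for `url` (nothing is sent)."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    # The request line names the path; the Host header names the server.
    return f"GET {target} HTTP/1.1\r\nHost: {parts.hostname}\r\nConnection: close\r\n\r\n"


url = "http://info.cern.ch/hypertext/WWW/TheProject.html"
print(describe_url(url))
print(http_get_request(url))
```

The printed request is what a client would send to the host; the server’s reply would contain the HTML document itself.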

By the end of 1990, the first web page was served on the open internet, and in 1991, people outside of CERN were invited to join this new web community. Following CERN’s 1993 code release, Tim moved to the Massachusetts Institute of Technology (MIT) in 1994 and founded the World Wide Web Consortium (W3C), an international community devoted to developing open web standards. He remains the Director of W3C to this day. [2]

The history of the internet can be traced back to 1945. [4, 5]

With the advent of the World Wide Web together with search engines such as Google, all manner of information (true, false and in-between) became increasingly and instantly accessible to anyone with a computer.

WHO: Public-Private Partnerships begin

On May 3, 1993, the World Health Assembly called on the World Health Organisation (WHO) to encourage the support of all partners in health development, including non-governmental organizations (NGOs) and private voluntary organisations, thus marking the beginning of public-private partnerships. WHO members raised concerns about vested interests influencing decisions, but alleged safeguards were put in place to remedy this possibility. [1, 2]

The WHO knew that “over time informal exchanges may lead to the development of formal relations between WHO and NGOs”. [3, 4] By 2002, the list of such relations was already huge.

Three months after taking office as WHO Director-General, and armed with her promise to “change the course of things” and “make a difference”, Dr Gro Harlem Brundtland initiated a meeting with ten senior pharmaceutical representatives of the International Federation of Pharmaceutical Manufacturers Association (IFPMA), who, on October 21, 1998, decided to join forces to help 100 million people worldwide who are “deprived of easy access to the most essential drugs and vaccines”.

The United Nations in 2012 reported the growing concern about the “influence of major corporations and business lobby groups within the UN.”

Inventor of PCR test wins Nobel Prize in Chemistry

In 1983, Kary Mullis worked out the steps of a process to amplify DNA sequences, called the polymerase chain reaction (PCR). On October 13, 1993, he was awarded the Nobel Prize in Chemistry for developing this procedure. [1]

Kary Mullis said PCR is used to “make a whole lotta somethin’ out of somethin’”, and that with the “PCR test, if you do it well, you can find almost anything.”

PCR tests are being used to “diagnose” COVID-19 disease in both symptomatic and asymptomatic (healthy) people to determine whether they are infectious. The PCR test kits are not standardised: each one uses a different tiny fragment of the SARS-CoV-2 virus genome, it does not test for the whole virus, and it cannot determine whether the fragment is part of a viable infectious pathogen. [2] More than 20 cycles is problematic, yet 45 cycles was recommended for the SARS-CoV-2 testing protocol.

The PCR technique can produce a billion copies of the target sequence in just a few hours. [3]
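The “billion copies” figure follows from how PCR works: in an idealised reaction every thermal cycle doubles the number of copies of the target sequence, so after n cycles one starting molecule becomes 2^n copies, and 30 cycles already gives about 1.07 billion. The sketch below assumes perfect doubling unless a lower per-cycle efficiency is passed in; real reactions fall short of 100% and eventually plateau, so the numbers are an upper bound, not a model of any particular test kit. The name `amplified_copies` is illustrative.

```python
def amplified_copies(initial: int, cycles: int, efficiency: float = 1.0) -> float:
    """Idealised PCR yield: each cycle multiplies the copy count by (1 + efficiency).

    efficiency=1.0 means perfect doubling; real reactions run lower and plateau.
    """
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return initial * (1.0 + efficiency) ** cycles


for n in (20, 30, 45):
    print(f"{n} cycles: {amplified_copies(1, n):.3g} copies from one starting molecule")
```

Run as written, it prints roughly 1.05e+06, 1.07e+09 and 3.52e+13 copies for 20, 30 and 45 cycles. Because each extra cycle doubles the count, going from 20 to 45 cycles multiplies the yield by 2^25, about 33.5 million.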

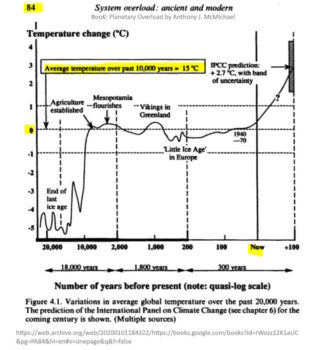

Average temperature over past 10,000 years = 15°C (until Hansen simply changed it to 14°C!)

In November 1993 “Anthony J. McMichael, an activist professor whose e-mail promising to pull strings in the Australian government figured in the Climategate scandal”, published his book Planetary Overload: Global Environmental Change and the Health of the Human Species, where he documented the average global temperature as 15 degrees Celsius. This appears to be the last time this 10,000 year average figure was published. [1]

“As it turned out, the average temperature of 15 degrees Celsius was an inconvenient value that did not support the allegations of global warming.” On January 18, 1998, James Hansen emailed the Worldwatch Institute, “the leaders of the global warming movement”, telling them that a “better base number” would be 14 degrees C. Around this time a zero point started to appear in the graphs, and it has appeared in IPCC reports ever since. [2, 3]

“It is hard to pinpoint the exact date when the CHANGE from 15 degrees to 14 degrees was introduced. It most likely happened sometime in 1997, and definitely no later than January of 1998.” [1]

The functional importance of small RNA is first described

Functionally important small RNAs were first described in nematodes in a paper published on December 3, 1993. This began the scientific understanding that not all RNA codes for a protein. It was not until 2001 that the function of microRNAs (miRNAs), noncoding small RNAs 18 to 26 nucleotides (nt) long, was understood, and that they were found not to be confined to the lower orders. [1, 2]

It was in 2005 that the regulatory function of miRNAs began to emerge. Gene expression is regulated by these non-coding miRNAs, which in turn help regulate biological pathways such as the immune system. This emerging field of gene regulation is known as epigenetics. [3]

Oklahoma City bombing – public attention to terrorism escalates – prelude to the Patriot Act

The Oklahoma City bombing of April 19, 1995 was a “domestic terrorist truck bombing” of the Alfred P. Murrah Federal Building in Oklahoma City, Oklahoma, United States, killing 168 people and injuring 680 others. [1, 2]

Officials believed that “the bombing brought the issue of domestic terrorism to the forefront”, yet that may not be the true public opinion about the potential threat.

Prior to this event, Senator Joseph Biden and Senator Tom Daschle were struggling to get their controversial Omnibus Counter-terrorism bills through the US Congress, amid concerns that civil liberties would be violated. These bills did not get voted on, but Joe Biden later said this bill was the basis for the 2001 Patriot Act.

Ivermectin for human use first registered in Australia

In 1996, ivermectin, under the trade name Stromectol by Merck Sharp & Dohme, was first approved for marketing in Australia as 6 mg tablets indicated for the treatment of onchocerciasis (river blindness) and intestinal strongyloidiasis (intestinal threadworms) in humans. In 1999, 3 mg oral tablets were registered, replacing the 6 mg tablets.

Ivermectin comprises two avermectin derivatives, B1a (90%) and B1b (10%), derived from the avermectins produced by Streptomyces avermitilis bacteria during fermentation. Avermectins are a class of highly active broad-spectrum antiparasitic agents that are structurally similar to the macrolide antibiotics but have no antibacterial effect. [1, 2]

Congress establishes Foundation of NIH Inc. (predecessor)

Established by Congress as the National Foundation for Biomedical Research, the Foundation was incorporated in 1996 as a 501(c)(3) charitable organization that raises private funding and manages public-private partnerships to support NIH’s mission.

In 1999, the Foundation’s name was changed to the Foundation for the National Institutes of Health (FNIH) to reflect more accurately its purpose, which is to support the mission of the NIH to develop new knowledge through biomedical research. It has an independent board of directors of eminent scientists and pharmaceutical industry and philanthropy representatives. [1, 2]

In 1997, the foundation launched the Clinical Research Training Program (CRTP) as a pilot program, which grew out of a realisation that the “medical and research communities are not producing clinical investigators at a sufficient rate to support the nation’s health needs”. Students “learn about the discovery and research process that may lead to better ways to prevent, diagnose and cure diseases”.

The foundation was also involved with genomics and biomedical engineering, and on November 19, 1998, it helped Fauci’s NIAID celebrate 50 years, an important initiative as “infectious diseases are the world’s leading cause of mortality and the third leading cause of death in the United States.”

US corporate communication monopolies begin

On February 8, 1996, President Clinton signed, with a “digital pen” [1], the Telecommunications Act of 1996, an “overhaul” of the 1934 Act. The goal of the legislation was to “let anyone” in, opening markets up to more competition, promoting innovation and lowering prices. The Federal Communications Commission (FCC), created under the 1934 Act, remained the regulator, but the new Act actually allowed industry giants to merge and monopolise corporate communication ownership. [2, 3, 4, 5]

By 2017 “six giant conglomerates control a staggering 90% of what media consumers read, watch, and listen to” (Comcast, Disney, Time Warner, 21st Century Fox, CBS and Viacom), compared to 50 in 1983. This consolidation of the media makes it easier to control the “trusted” narrative, and can be used to influence government policy and hide vested interests. [6, 7]

Is the real news coming from citizen journalists seeking the truth, or the “Mockingbird Media”?

Is the narrative controlled, and if so, who’s directing it?

Internet Archive launches first web page crawlers

On October 29, 1996, the San Francisco-based nonprofit Internet Archive began crawling and archiving the World Wide Web for the first time. Founders Brewster Kahle and Bruce Gilliat invented a system for taking snapshots of web pages before they vanished and archiving them for future viewing. In 2001, the archive was made accessible to the public through the Wayback Machine. At the time, the World Wide Web (WWW) was estimated to be between 1 and 10 terabytes in size. In 1996, they didn’t know how fast the WWW was growing, nor could they predict how large it would become.

Today the Internet Archive continues to preserve important historical information, not only as web page captures in the Wayback Machine but also as searchable scans of books, including historic medical journals, and at times it is possible to recover videos that YouTube continuously deletes. [1]

NIH assigns new Human Genome Research Institute

The US National Human Genome Research Institute (NHGRI) became an official institute in January 1997, when US Department of Health and Human Services (HHS) Secretary Donna E. Shalala signed documents giving the National Center for Human Genome Research (NCHGR) this new name, putting it on equal standing with the 27 other research institutes at the National Institutes of Health (NIH).

The initial NCHGR was established in 1989 to carry out NIH’s role in the International Human Genome Project. The genome sequencing endeavour was conceived in June 1985 at the University of California and launched in 1990, through funding from the NIH and Department of Energy. The international genome project concluded April 14, 2003.

That same year in 1997 the “NHGRI and other scientists show that three specific alterations in the breast cancer genes BRCA1 and BRCA2 are associated with an increased risk of breast, ovarian and prostate cancers.”

Universal Declaration on the Human Genome and Human Rights (1997)

On November 11, 1997, the General Conference of the United Nations Educational, Scientific and Cultural Organization (UNESCO), at its 29th session, adopted the Universal Declaration on the Human Genome and Human Rights (1997). [1]

Seven years earlier in 1990 the sequencing of the human genome began.

US DOD Biodefense vaccine program begins with anthrax vaccine

On November 16, 1997 US Secretary of Defense William Cohen appeared on ABC’s television program ”This Week” and held up a 5 lb bag of sugar and told the world, if it were anthrax spores, it would be enough to take out half the population of Washington, D.C., an assertion later debunked by government experts. [1, 2, 3]

At the time, diplomatic efforts were underway to return U.N. weapons inspectors to Iraq, which Saddam Hussein had blocked. Richard Butler, whose United Nations Special Commission (UNSCOM) was charged with dismantling Iraqi weapons, said that “in the absence of inspectors, Iraq could ferment new biological toxins ‘within about a week’”. White House national security adviser Samuel R. “Sandy” Berger said that “U.N. inspectors cannot account for 2,500 gallons of anthrax” and that “U.S. officials suspect Iraq has it on hand or could reconstitute it.” Besides the bag of sugar, Cohen said “Iraq has missiles that can fly 3,000 kilometers”, implying they could be loaded with anthrax. [4]

The threat had been planted in the minds of the world, providing justification for the start of the Department of Defense’s (DOD) “biodefense” vaccine program, in the name of “protection” and “preparedness” against bio-terrorism.

On December 15, 1997, the Pentagon announced the anthrax vaccine program, marking the first time that American troops would receive mandatory, blanket, routine inoculations against a “germ warfare agent”. The program was a six-dose vaccine series spread over 18 months, plus annual boosters. The rollout began in March 1998.

The vaccine was likely the cause of the illness known as Gulf War Syndrome.

WHO/UNICEF: Stop Transmission of Polio (STOP) program begins

The Stop Transmission of Polio (STOP) program began in 1998 as part of the Global Polio Eradication Initiative (GPEI) to recruit and train international public health consultants and deploy them around the world. [1, 2, 5]

“The global initiative to eradicate poliomyelitis by end of the year 2000 is the largest international disease control effort ever, targeted at completely eliminating an infectious disease from the face of the earth.” [3]

Today the STOP program has morphed into a program that focuses on all alleged vaccine-preventable diseases (VPD). The program is run by the US CDC in collaboration with the WHO and UNICEF. [4]

Global average temperature is quietly lowered by NASA from 15°C to 14°C

Sometime in 1997, but definitely by January 18, 1998, the estimated average global temperature of 15 degrees Celsius (°C) was lowered to 14 degrees Celsius, which instantly made the average global temperature reach a “record high” of 14.40°C in 1997! [8]

NASA Goddard Institute’s director James “Jim” Hansen reported this change to Worldwatch via email, informing them that 14°C was a “better base number”, as documented in the 2012 American Thinker article “Fourteen is the New Fifteen”.

Documents dated 1997, as reported in The American Thinker, show that NASA quietly changed the “inconvenient” average global temperature of 15 degrees Celsius (°C) to a lower 14 degrees Celsius, as 15°C “did not support the allegations of global warming”. [1, 2, 3]

Prior to 1997, 15°C is documented as the average global temperature in multiple locations:

- In March 1988 the New York Times reported that Hansen used the “30-year period 1950-1980, when the average global temperature was 59 degrees Fahrenheit [15 degrees Celsius], as a base to determine temperature variations”: the zero point! [1, 2, 3]

- The first IPCC Assessment Report, released in 1990, “underlined the importance of climate change as a challenge with global consequences and requiring international cooperation.” A table on page xxxvii (37) of this 1990 report lists the “Observed Surface Temperature” of Earth as 15 degrees Celsius.

- In 1995 the IPCC report again stated 15°C, on pages 26 and 57. [5]

As reported in the 2012 American Thinker article cited above, the average global temperature was stated to have been lowered to 14°C sometime in 1997, as shown in multiple locations, but definitely by January 18, 1998, NASA’s James Hansen had changed the Goddard Institute’s estimated average global temperature from 15 degrees Celsius to 14 degrees Celsius.

Today the IPCC shows multiple graphs with a “zero point”, but it is hard to find what temperature that point represents.

- But in March 2020 the “global mean surface air temperature for that [base] period [1951-1980] was estimated to be 14°C (57°F)”, not 15°C as Hansen claimed in 1988! [4]

Bill Gates brand change: from computer geek monopolist to public health “philanthropist”

Who is Bill Gates and why should you care? A man who has gone from monopolising computer software to monopolising global health. [Part 1, 2, 3]

On May 18, 1998, US regulators “launched one of the biggest antitrust assaults of the century, accusing Microsoft Corp. of using its dominance in computer software to drive competitors out of business”. Microsoft lost the case at trial, and the final judgment in the case was handed down in November 2002. [1, 4]

After appealing the decision, Microsoft settled “the case with the Department of Justice in November of 2001 by agreeing to make it easier for Microsoft’s competitors to get their software more closely integrated with the Windows operating system…hardly on the same level as a forced breakup”. [7]

Then, from 1999, Gates began to reposition his brand from computer geek monopolist to public health and education “philanthropist” by forming the Bill & Melinda Gates Foundation (BMGF), which officially launched in 2000.

Rome Statute establishes the ICC

On July 17, 1998, after a five-week conference in Rome, Italy, diplomats signed the Rome Statute, a treaty establishing the International Criminal Court (ICC). The date is celebrated annually as World Day for International Justice. The statute entered into force on July 1, 2002. [1]

As of March 2019, 122 states, including Australia, were parties to the statute; the USA, Ukraine, Russia and Israel were among the countries that had not ratified the treaty. [2]

In brief, the statute establishes the court’s functions, jurisdiction and structure. The court investigates crimes where states are unable or unwilling to do so themselves.

The statute covers four core international crimes:

- Genocide

- Crimes against humanity

- War crimes

- Crimes of aggression

Article 8 of the statute lists “torture or inhuman treatment, including biological experiments” among war crimes.

The Johns Hopkins Center for Civilian Biodefense Studies was founded

Established in September 1998, the Johns Hopkins University Center for Civilian Biodefense Studies was created to increase national and international awareness of the medical and public health threats posed by biological weapons, such as anthrax and smallpox, that terrorists can use to unleash mass destruction on a population.

Originally seeded with monies from the schools of Public Health and Medicine, the center then received funding from Congress, the Alfred P. Sloan Foundation, the Robert Wood Johnson Foundation and a few private philanthropists.

- The founding director was Donald Ainslie Henderson, a longtime dean of the School of Public Health, who “was concerned about the nation’s lack of preparation in the event of a biological attack”.

- Then Dr Tara O’Toole served as director from 2001 to 2003.

In February 1999, the Center held its first “response to bioterrorism” symposium.

The group was later renamed the Center for Health Security (CHS), a less military-sounding name, yet for decades afterwards it continued to hold “war game” exercises simulating responses to bio-terrorist attacks and outbreaks.

These scripted simulations “usually ended in a need to control the populace, by which behavioral modification techniques are used to enforce cooperation” of the people. [1, 2, 3]
